BRAG - Brain Retrieval-Augmented Generation

Exploring how far context can go—files, sensors, and maybe even minds

This week’s idea came to me while waiting at Bengaluru Airport for my flight back to London after a work trip with a dear colleague Mr.

The problem we were discussing is this: today, one of the least attractive parts of using GenAI is providing it with context. For every domain where we want to integrate GenAI, we are forced to spell out the specifics of our query and all the background around it, so the model can “understand” the variables of the little piece of the world where our problem lives.

To solve this, engineers have quickly developed a whole toolbox of techniques to capture and manipulate “context” data and feed it efficiently into the model: fine-tuning, RAG and its many variants, prompt engineering, and so on.

With this in mind, I had a few thoughts. The most instinctive one—probably most relevant to day-to-day work—is the concept of a Context-Wallet. But before we get there, let me define what I mean by context.

What I Mean by Context

Think of context as everything that surrounds a specific domain of work or life.

For example, let’s say I’m working on a project called Project Oasis.

The context here is all the data the project generates and exchanges daily:

Emails

Project documentation (PDFs, diagrams, spreadsheets, Jira, Confluence)

Call transcripts

Anything else related to the project’s execution

This is the “world” that gives meaning to my working queries.

The Context-Wallet

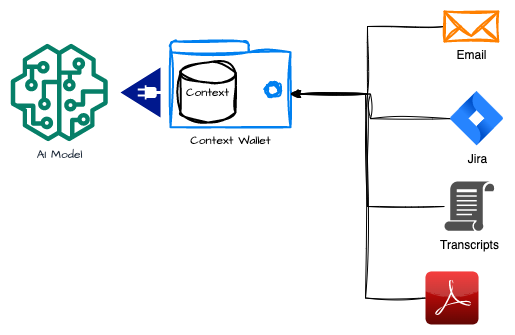

Now imagine a portable, personal, and private repository—a Context-Wallet.

Think of it as your digital memory bank: all the context you generate through work and life is stored here, under your control. Instead of starting from zero every time you interact with an AI model, you simply “plug in” your wallet. The model gains instant access to the relevant background, while you retain full ownership of the data.

What makes the Context-Wallet powerful is not just storage, but the way it evolves:

Continuously Updated

Every interaction you have with an AI model enriches the wallet. If you refine a prompt, upload a document, or correct an answer, those updates feed back into your context automatically—so the next interaction starts smarter than the last.

Multi-Source Integration

You can add new data directly (like a PDF, a transcript, or meeting notes) or link it to external systems (email, project management tools, cloud drives). Instead of scattering your “knowledge exhaust” across apps, the wallet unifies it into a single stream.

Automatic Enrichment

The Context-Wallet doesn’t just collect raw data; it organizes, tags, and structures it. Think of metadata: time, project, participants, domain. This way, the model doesn’t just see “a file” but knows that this document belongs to Project Oasis, was created last week, and relates to supply chain planning.

Portability & Interoperability

The wallet is not bound to a specific vendor. You can use it with any AI model—Bedrock, OpenAI, Anthropic, open-source—without having to rebuild your prompts or retrain from scratch.

Privacy & Ownership

Unlike handing over your data to a SaaS vendor’s closed system, the Context-Wallet belongs to you. You decide what’s shared, with which model, and for how long.

This way, the Context-Wallet becomes a bridge: it remembers your work and history like a trusted colleague, but always under your control, ready to be plugged into any model you choose.
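To make the idea a little more concrete, here is a minimal sketch of what a wallet entry and a vendor-neutral "plug in" step might look like. Everything in it is an assumption for illustration: the `ContextItem` and `ContextWallet` names, the `shareable` flag, the sample texts, and the dates are all made up, and a real wallet would need durable, encrypted storage rather than a Python list.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ContextItem:
    """One piece of context plus the metadata the wallet attaches to it."""
    text: str
    project: str
    created: date
    domain: str
    participants: list[str] = field(default_factory=list)
    shareable: bool = True  # the owner decides whether this item may leave the wallet


class ContextWallet:
    """Hypothetical in-memory wallet, for illustration only."""

    def __init__(self) -> None:
        self._items: list[ContextItem] = []

    def add(self, item: ContextItem) -> None:
        self._items.append(item)

    def select(self, project: str) -> list[ContextItem]:
        """Return only the shareable items for one project, newest first."""
        matches = [i for i in self._items if i.project == project and i.shareable]
        return sorted(matches, key=lambda i: i.created, reverse=True)

    def render(self, project: str) -> str:
        """Build a plain-text context block that any model's prompt could include."""
        return "\n".join(
            f"[{i.created.isoformat()} | {i.project} | {i.domain}] {i.text}"
            for i in self.select(project)
        )


# Invented sample data: one shareable item and one the owner keeps private.
wallet = ContextWallet()
wallet.add(ContextItem("Supplier lead times rose to six weeks.", "Project Oasis",
                       date(2024, 5, 20), "supply chain planning"))
wallet.add(ContextItem("Draft salary review notes.", "Project Oasis",
                       date(2024, 5, 21), "HR", shareable=False))

context_block = wallet.render("Project Oasis")
print(context_block)
```

Because `render` produces plain text rather than a vendor-specific payload, the same block could be prepended to a prompt for any model, which is the portability point in miniature. The `shareable` flag is the crudest possible stand-in for the "you decide what's shared" rule.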

The Immersive Context-Wallet

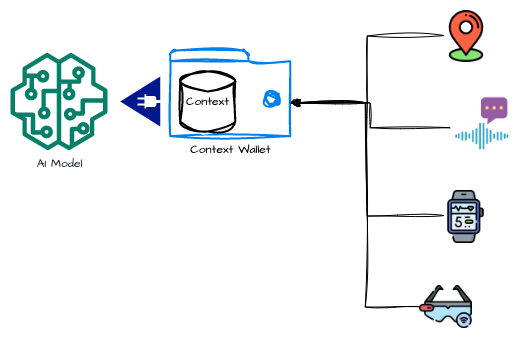

Taking the concept one step further, imagine an Immersive Context-Wallet.

It builds on the same foundation as the Context-Wallet (your private, portable repository of knowledge), but now it also integrates real-time signals from your lived experience. Instead of just collecting documents, transcripts, and project data, it layers in sensory and biometric inputs, giving the AI a richer, more dynamic understanding of you in the moment.

Here’s what that looks like:

Visual & Audio Streams

A continuous feed from smart glasses, AR devices, or your phone’s camera and microphone. The model doesn’t just read your notes—it sees what you see and hears what you hear: meetings, presentations, environments, even ambient context like noise or interruptions.

Body & Health Data

Wearables like a smartwatch or health band provide biometric signals—heart rate, breathing patterns, blood pressure, sleep cycles. The AI can correlate this with context (e.g., noticing that your stress level spikes in specific types of meetings, or your energy dips mid-afternoon).

Location Awareness

GPS data from your phone or watch adds situational grounding. Are you at the office, commuting, in another country? This location layer can unlock context-specific recommendations, like surfacing the right documents when you step into a client site, or adapting output to match local conditions.

This Immersive Context-Wallet creates a living, breathing timeline of your world. Instead of static files and prompts, the AI has a dynamic feed of your environment, state, and actions.

The result is a system that could:

Anticipate needs before you ask (“You’re about to present—here’s the latest version of the deck”).

Adjust responses to your physical or emotional state.

Seamlessly connect the dots between what you’re experiencing and the knowledge already in your wallet.
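The correlation mentioned earlier (stress spiking in specific types of meetings) is the easiest part of this to sketch. The snippet below is a toy under stated assumptions: the `CalendarEvent` and `HeartRateSample` types are hypothetical, heart rate is used as a rough proxy for stress, and all the numbers are invented.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class CalendarEvent:
    kind: str   # e.g. "stand-up" or "client review"
    start: int  # minutes since midnight
    end: int    # minutes since midnight (exclusive)


@dataclass
class HeartRateSample:
    minute: int  # minutes since midnight
    bpm: int


def average_bpm_by_event_kind(events, samples):
    """Group heart-rate samples by the kind of event they fall inside."""
    buckets = defaultdict(list)
    for sample in samples:
        for event in events:
            if event.start <= sample.minute < event.end:
                buckets[event.kind].append(sample.bpm)
    return {kind: mean(values) for kind, values in buckets.items()}


# Invented timeline: two meetings and five wearable readings.
events = [
    CalendarEvent("stand-up", 540, 555),
    CalendarEvent("client review", 600, 660),
]
samples = [
    HeartRateSample(545, 68),
    HeartRateSample(550, 72),
    HeartRateSample(610, 95),
    HeartRateSample(640, 101),
    HeartRateSample(700, 70),  # falls outside every event, so it is ignored
]

averages = average_bpm_by_event_kind(events, samples)
print(averages)
```

The interesting design question is not the arithmetic but the join: the wallet only becomes "immersive" once the wearable stream and the calendar share a common timeline that the model can query.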

Of course, this opens massive questions about privacy, ownership, and boundaries. Who controls this data? How is it protected? What do you choose to share with an AI model, and what stays private?

But if solved responsibly, the Immersive Context-Wallet could be the bridge between today’s transactional GenAI prompts and a truly continuous AI companion—one that doesn’t just understand your words, but your world.

BRAG – Brain Retrieval-Augmented Generation

But the final monster of this discussion has been BRAG: Brain Retrieval-Augmented Generation.

If the Context-Wallet is about carrying our digital traces, and the Immersive Context-Wallet is about layering in signals from our lived experience, then BRAG asks a more radical question: what if the “wallet” disappears entirely, and the interface to AI is our brain itself?

In this thought experiment, a Brain Programming Interface would sit between our neural activity and the model. Imagine electrodes, or perhaps some future non-invasive sensors, continuously reading the firing patterns of neurons. A converter layer would translate those signals into embeddings or structured representations, while an API gateway would pass them directly into an AI model. The loop could even work in both directions: not only retrieving information from the “brain context” but feeding enriched outputs back, perhaps as visual overlays, auditory signals, or even as direct neural stimulation.

In such a system, the AI wouldn’t wait for prompts. It would already have the raw intent—the beginnings of a thought, the memory being recalled, the pattern of focus or distraction. Retrieval would shift from searching files or scanning context windows to scanning your own cognitive state. Generation, then, would be built on top of your living stream of thought.
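Purely as a thought-experiment aid, the converter-plus-retrieval flow can be mimicked with ordinary numbers. To be loud about the assumptions: the "signal" below is a made-up list of numbers, not anything resembling real neural data; `to_embedding` is a toy pooling function I invented for this sketch, not a decoding method; and the memory entries are hypothetical. The only real technique on show is the standard RAG step of ranking stored items by cosine similarity to a query embedding.

```python
import math


def to_embedding(signal, dims=4):
    """Toy 'converter layer': average the signal in equal chunks, then L2-normalise."""
    if len(signal) < dims:
        raise ValueError("signal must have at least `dims` values")
    chunk = len(signal) // dims
    pooled = [sum(signal[i * chunk:(i + 1) * chunk]) / chunk for i in range(dims)]
    norm = math.sqrt(sum(x * x for x in pooled)) or 1.0  # avoid dividing by zero
    return [x / norm for x in pooled]


def cosine(a, b):
    """For unit-length vectors, cosine similarity is just the dot product."""
    return sum(x * y for x, y in zip(a, b))


def retrieve(query_embedding, memory, top_k=1):
    """Return the texts of the top_k memory entries most similar to the query."""
    ranked = sorted(memory, key=lambda entry: cosine(query_embedding, entry[1]),
                    reverse=True)
    return [text for text, _ in ranked[:top_k]]


# Hypothetical "brain context": each entry pairs a text with a stored embedding.
memory = [
    ("Talking points rehearsed yesterday", to_embedding([9, 9, 1, 1, 0, 0, 0, 0])),
    ("Chart for the shared screen", to_embedding([0, 0, 0, 0, 1, 1, 9, 9])),
]

incoming_signal = [8, 10, 2, 0, 0, 1, 0, 0]  # invented numbers standing in for a reading
query = to_embedding(incoming_signal)
retrieved = retrieve(query, memory)

# The retrieved text becomes context for generation, as in ordinary RAG.
prompt = "Context: " + "; ".join(retrieved) + "\nTask: assist the user."
print(prompt)
```

What the sketch makes visible is that BRAG would leave the retrieval and generation halves of RAG untouched. All the science fiction lives inside `to_embedding`.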

That return path is worth dwelling on. AI responses wouldn’t need to appear only as text on a screen. They could return as neural signals: a visual overlay projected in your mind’s eye, a soundless voice in your inner ear, or even a subtle shift in mood or focus. At that point, the line between “using a tool” and “thinking with a tool” becomes hard to draw.

Picture a scene: you’re walking into a client meeting. There’s no laptop, no slides, no notes. As you shake hands, the AI is already inside your mental stream—surfacing the three talking points you rehearsed yesterday, reminding you of the last conversation you had with this person, flagging the data you’ll need if a specific question arises. The moment you think “show the chart”, it appears on the shared screen. The AI doesn’t just know your files; it knows your intent, in real time.

Of course, this is more sci-fi than roadmap. While companies like Neuralink experiment with implants for medical purposes, and groups like Precision Neuroscience and Meta explore alternatives, these are narrow and fragile steps compared to what BRAG imagines. The idea here is not to suggest that this path is established, but to entertain the technical contours of what such a system could be if it ever existed.

And that is where the fascination lies: BRAG is less about “when will it happen?” and more about “what would it mean if it did?” A world where context isn’t typed, uploaded, or streamed, but retrieved directly from the mind itself.

As I sit here in Dubai airport waiting for my connection back to London, I can’t help but smile at how these ideas always seem to come when you’re between places. A casual chat on a work trip turned into sketches of Context-Wallets, Immersive versions, and finally the sci-fi leap of BRAG.

I don’t know if any of this will ever exist beyond thought experiments and whiteboards—but that’s part of the fun. It’s a reminder that technology is never just about code or models. It’s about imagination, and the questions we ask when we’re tired, in transit, and letting our minds wander.

Maybe that’s the real “context” that makes AI interesting: not just the data we feed it, but the human moments where ideas spark—sometimes in the middle of an airport lounge.

👉 Until then, I’ll just BRAG about being the first one to coin Brain Retrieval-Augmented Generation.