AWS Bedrock Agent Tutorial: Shopping & Flights Demo

Claude 4.x + Lambda + OpenAPI action groups—build, deploy, and test in one session.

toString() is hands-on AI + architecture for busy engineers. If you want runnable blueprints, subscribe; it helps me keep shipping these.

TL;DR: I built a small AI helper on Amazon Bedrock that can either search products or find flights and decide which to use on its own. It delivers price-sorted results with full logging. Today’s build/test cost was about $46 over ~3 hours; ongoing use is inexpensive and controllable. It’s basic (no personal data or memory yet), and the next steps are stock/price checks, a simple UI, a short pilot—and eventually an “alive” agent that learns your preferences.

Why I built this

I didn’t want another slide about agents. I wanted something I could fork this week, and I wanted to test the new AWS Bedrock Agent. So I created a small agent that can search real products across shops and, when the question changes, switch tools entirely (flights).

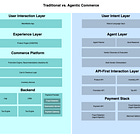

This is a simplistic slice of last week’s idea, “Agentic E-commerce.” The point is that the agent decides, not my controller code.

Link to last week’s post:

Repo: https://github.com/skenklok/aws-agent-bedrock-demo

What you get

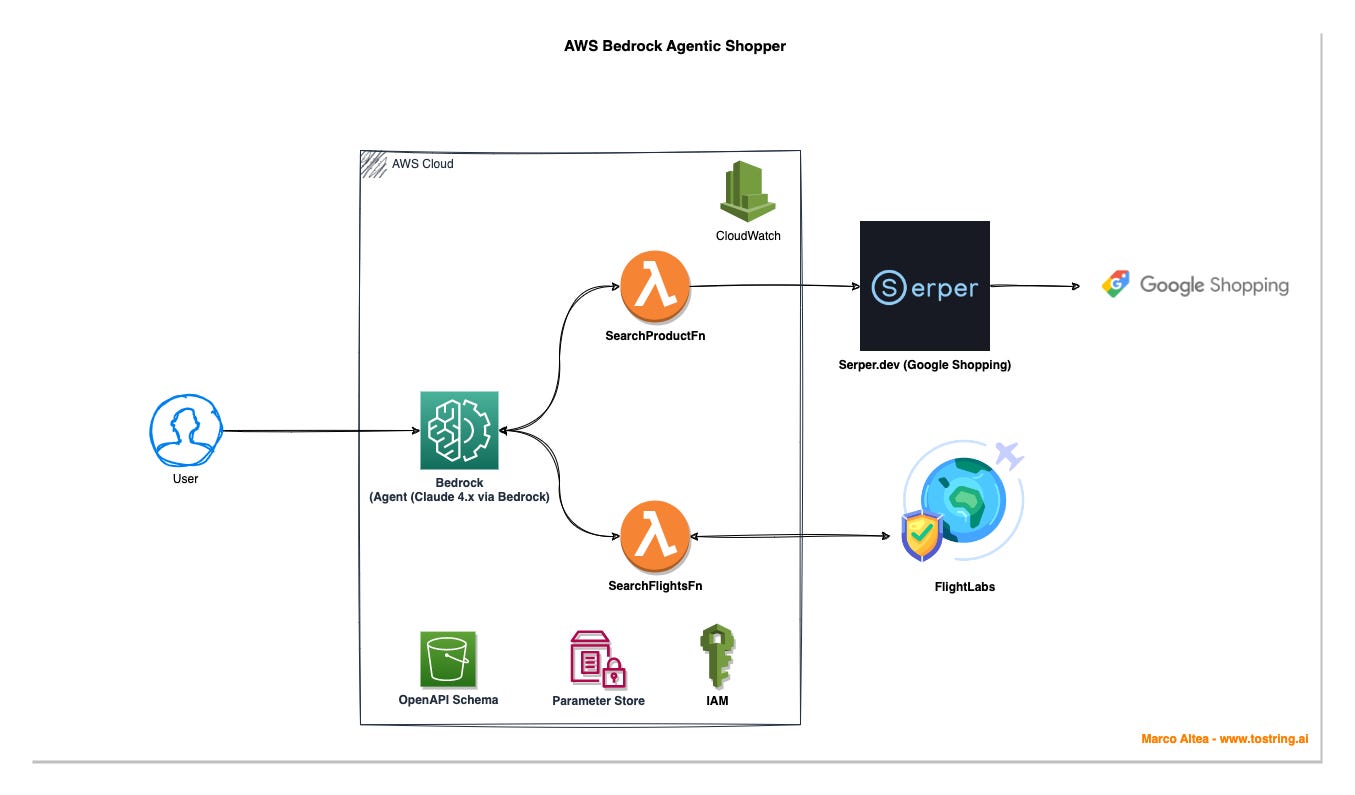

A Bedrock Agent (Claude 4.x via Bedrock’s planner)

Action Group 1 – SearchProductsFn → Serper.dev → Google Shopping results

Action Group 2 – SearchFlightsFn → FlightLabs itineraries

OpenAPI schemas stored in S3, API keys in SSM Parameter Store, logs in CloudWatch

Two different queries → two different tools → one agent

Architecture

How to run it yourself

Readme: https://github.com/skenklok/aws-agent-bedrock-demo/blob/master/README.md

Documentation: https://github.com/skenklok/aws-agent-bedrock-demo/blob/master/DOCUMENTATION.md

Build Logs

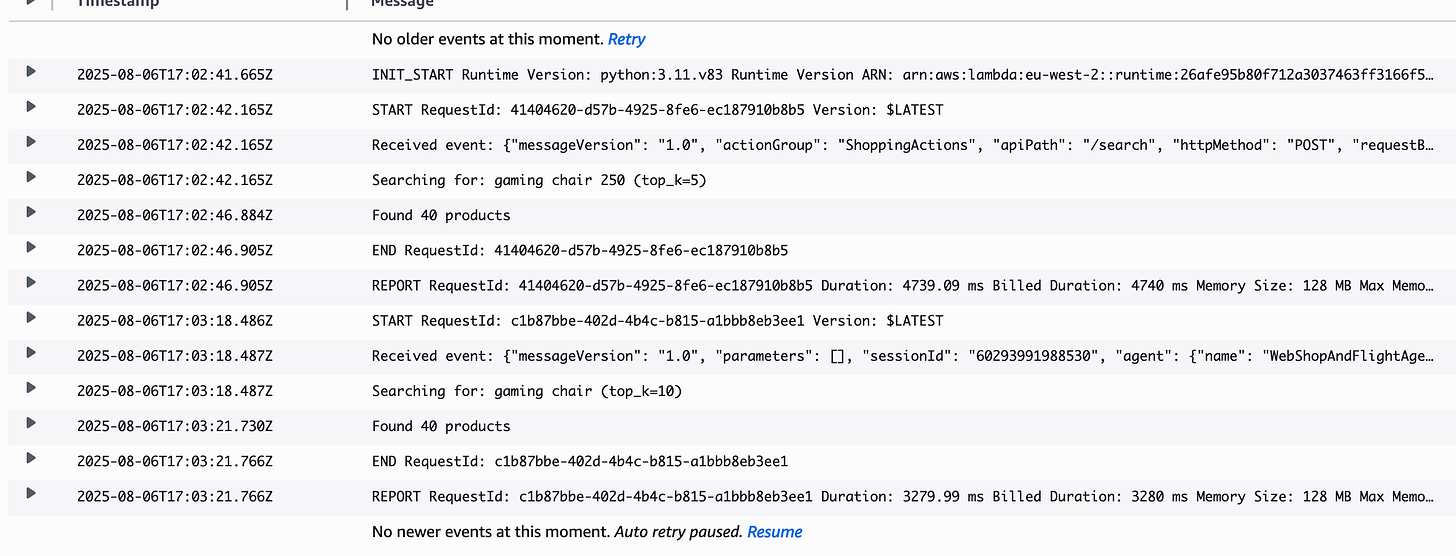

Action Group: ShoppingActions

OpenAPI: POST /search with { query, top_k } → returns [{ title, url, priceUSD, rating, source }]

Lambda: calls Serper.dev’s Shopping endpoint, normalizes, sorts by priceUSD.
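A minimal sketch of what the normalize-and-sort step could look like. It is not the repo's actual code. The raw field names (`title`, `link`, `price`, `rating`, `source`) and the price-string format are assumptions about the upstream response, and `parse_price_usd` and `normalize_products` are hypothetical helper names.

```python
import re
from typing import Any, Dict, List, Optional


def parse_price_usd(price_text: str) -> Optional[float]:
    """Pull the first number out of a price string such as "$1,299.00"."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", price_text or "")
    if not match:
        return None
    return float(match.group().replace(",", ""))


def normalize_products(raw_items: List[Dict[str, Any]], top_k: int = 5) -> List[Dict[str, Any]]:
    """Map raw shopping results to the schema shape and sort by priceUSD ascending."""
    products = []
    for item in raw_items:
        # Assumed raw field names; adjust to whatever the upstream API really returns.
        price = parse_price_usd(str(item.get("price", "")))
        if price is None:
            continue  # skip items with no parseable price
        products.append({
            "title": item.get("title", ""),
            "url": item.get("link", ""),
            "priceUSD": price,
            "rating": item.get("rating"),
            "source": item.get("source", ""),
        })
    # Sort first, then truncate, so top_k really means "the k cheapest".
    products.sort(key=lambda p: p["priceUSD"])
    return products[:top_k]
```

Sorting before truncating is a deliberate choice here: cutting to `top_k` first would return the cheapest of an arbitrary subset rather than the cheapest overall.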

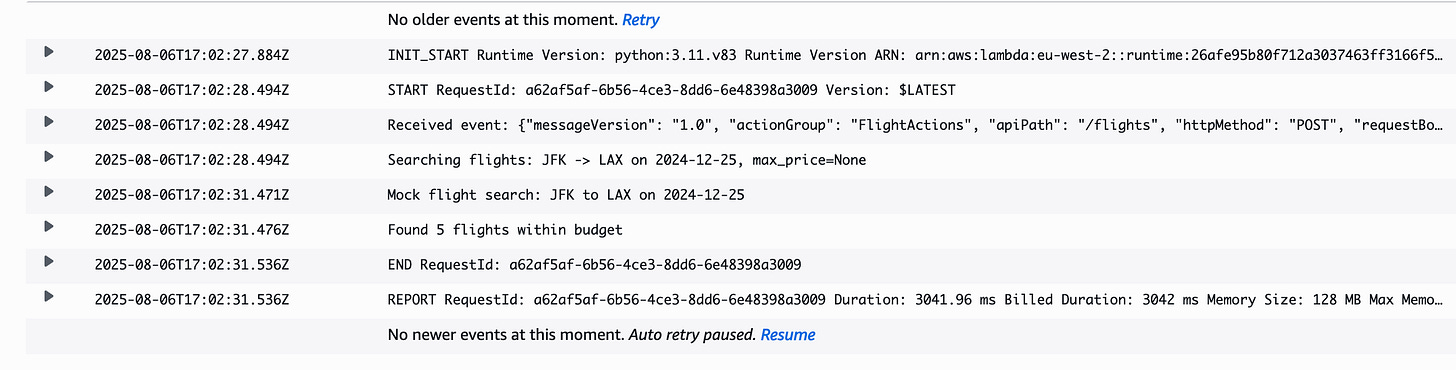

Action Group: FlightActions

OpenAPI: POST /flights with { from, to, date, max_price? } → returns [{ airline, flightNum, departTime, arriveTime, priceUSD }]

Lambda: uses FlightLabs; optional budget filtering.
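The optional budget filter can be sketched in a few lines. This assumes the itineraries have already been normalized to the response shape above (each with a numeric `priceUSD`); `filter_flights` is a hypothetical name, not a function from the repo.

```python
from typing import Any, Dict, List, Optional


def filter_flights(
    itineraries: List[Dict[str, Any]],
    max_price: Optional[float] = None,
) -> List[Dict[str, Any]]:
    """Drop itineraries above max_price (if given) and sort by priceUSD ascending."""
    kept = [
        flight for flight in itineraries
        if max_price is None or flight["priceUSD"] <= max_price
    ]
    return sorted(kept, key=lambda flight: flight["priceUSD"])
```

Treating a missing `max_price` as "no limit" keeps the parameter truly optional, so the planner can omit it when the user never mentions a budget.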

Agent instructions I used (kept explicit and boring on purpose)

“You can use two actions. SearchProducts to find products with priceUSD, rating, url, source. SearchFlights to find airfare. When the user asks product questions, call SearchProducts; when they ask about flights, call SearchFlights. Always return a JSON array sorted by price USD ascending.”

What makes this “AGENTIC” (and not just a Search wrapper)

The planner chooses if/when to call a tool and which one based on intent. With two tools the choice is visible and auditable in the trace.

No branching controller code on my side; the planner earns its keep.
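Because the planner does the routing, each Lambda only has to unpack the request it is handed and wrap its result. The sketch below shows that envelope for an OpenAPI action group as I understand the Bedrock Agents Lambda event format; check the field names against the current AWS documentation before relying on them. `search_products` is a hypothetical stub standing in for the real search call.

```python
import json
from typing import Any, Dict, List


def read_request_properties(event: Dict[str, Any]) -> Dict[str, Any]:
    """Flatten requestBody properties ([{name, type, value}, ...]) into a dict."""
    props = (
        event.get("requestBody", {})
        .get("content", {})
        .get("application/json", {})
        .get("properties", [])
    )
    return {p["name"]: p["value"] for p in props}


def build_response(event: Dict[str, Any], payload: Any, status: int = 200) -> Dict[str, Any]:
    """Wrap a JSON-serializable payload in the response envelope (assumed shape)."""
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event.get("actionGroup"),
            "apiPath": event.get("apiPath"),
            "httpMethod": event.get("httpMethod"),
            "httpStatusCode": status,
            "responseBody": {"application/json": {"body": json.dumps(payload)}},
        },
    }


def search_products(query: str, top_k: int) -> List[Dict[str, Any]]:
    """Hypothetical placeholder for the real product search."""
    return [{"title": f"stub result for {query}", "priceUSD": 0.0}][:top_k]


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    args = read_request_properties(event)
    query = args.get("query", "")
    top_k = int(args.get("top_k", 5))  # property values may arrive as strings
    return build_response(event, search_products(query, top_k))
```

Note that there is no `if intent == ...` anywhere: the handler serves one API path, and the choice between shopping and flights happens upstream in the planner.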

Vibe Coding: Cost and Efficiency

I paired with Cursor + Anthropic’s Claude Opus 4.1 for ~3 hours to build and test this stack.

Today’s usage (from Cursor billing):

| Model | Calls | Input | Output | Cost |
| --- | --- | --- | --- | --- |
| claude-4.1-opus | 136 | 57,862 | 50,157 | $39.22 |
| claude-4.1-opus-thinking | 38 | 146,454 | 21,665 | $6.97 |
| Total | 174 | 204,316 | 71,822 | $46.19 |

It’s not cheap, but Opus removed the back-and-forth on multi-file diffs (OpenAPI + Lambda + CDK + helper script). For trivial edits I’ll drop to a cheaper model.

Where this demo fits

What you’ve seen here is deliberately small: one agent, two tools, a clean branch. It proves the planning loop without burying you in plumbing. Useful—but not alive.

The “alive” version is a different beast. It doesn’t wait for me to type a query; it keeps a short memory of my hard rules (budget, brands I avoid, sizes that fit my setup) and a soft sense of taste (things I click, things I dismiss, how often I say “show me cheaper”, what I write to my friends). It runs a light sweep on a schedule, skims a few allow-listed sources, and turns that into candidates I might actually act on—then shows up with a short, justified list and a one-line reason: “Under €350, IPS, ships this week.”

Day to day it would look like this: Monday morning, the agent does a “morning sweep.” It pulls fresh product and flight data (cheap, incremental calls), merges it with my profile, and scores options with a simple utility function: relevance × price-fit × availability × trust − friction. It proposes three items, not thirty, and cites sources. If I tap ✅ or ❌, that signal updates the profile with time-decay so last month’s rabbit hole doesn’t dominate forever. If I ignore it, it backs off. No nags.
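The utility function above can be sketched directly. This is an illustration only: it assumes each factor has already been scaled to the 0 to 1 range, and the `Candidate` class and `shortlist` helper are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    relevance: float     # assumed to be in [0, 1]
    price_fit: float     # assumed to be in [0, 1]
    availability: float  # assumed to be in [0, 1]
    trust: float         # assumed to be in [0, 1]
    friction: float      # penalty subtracted from the product


def utility(c: Candidate) -> float:
    """relevance x price-fit x availability x trust - friction."""
    return c.relevance * c.price_fit * c.availability * c.trust - c.friction


def shortlist(candidates: List[Candidate], limit: int = 3) -> List[Candidate]:
    """Return the highest-utility candidates: three items, not thirty."""
    return sorted(candidates, key=utility, reverse=True)[:limit]
```

Multiplying the factors means a zero on any one of them (say, out of stock) sinks the candidate regardless of how well it scores elsewhere, which is usually what you want for a short list.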

Under the hood it’s still the same pattern you saw in the demo—OpenAPI tools and a planner—but with a loop around it: Observe → Update profile → Crawl → Score → Plan → Act → Explain. The crawler is polite (respect robots, pay where required), the metadata is canonical (schema.org Product/Offer, simple itinerary JSON), and the actions are small and composable: SearchProducts, CheckStock, PriceHistory, Currency/VAT, Flights. The planner chooses which ones to call based on the goal and the profile; I don’t write if/else ladders.

Two guardrails keep it sane. First, cost: daily caps on crawl/API spend, and only call expensive tools when the acceptance probability is high. Second, control: consent per data source (calendar, inbox, none), a visible “why this?” on every suggestion, and one-tap “snooze this topic” or “never show this again.” When anything fails (source down, API throttled), it fails soft—fallback to yesterday’s shortlist with a note.
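The cost guardrail could be expressed as a small gate in front of every tool call. This is a sketch under assumptions: the thresholds are made-up defaults, the acceptance probability is supplied by some model that is not shown, and `SpendGuard` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass
class SpendGuard:
    daily_cap_usd: float
    expensive_threshold_usd: float = 0.25  # assumed cutoff for "expensive"
    min_acceptance: float = 0.6            # assumed minimum acceptance probability
    spent_today_usd: float = 0.0

    def allow(self, tool_cost_usd: float, acceptance_probability: float) -> bool:
        """Permit a call only if it fits the daily cap and is worth its cost."""
        if self.spent_today_usd + tool_cost_usd > self.daily_cap_usd:
            return False  # would exceed the daily cap
        if tool_cost_usd >= self.expensive_threshold_usd:
            # Expensive tools need a high chance that the user acts on the result.
            return acceptance_probability >= self.min_acceptance
        return True  # cheap calls pass as long as the cap holds

    def record(self, tool_cost_usd: float) -> None:
        """Add a completed call's cost to today's running total."""
        self.spent_today_usd += tool_cost_usd
```

A caller would check `allow(...)` before invoking a tool, call `record(...)` afterwards, and fall back to yesterday's shortlist when the gate says no.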

So yes, today’s build is a slice: reactive, on-demand, easy to fork. The next step is to make it habitual—a small agent that learns my tastes, watches the web on my behalf, and proposes the next useful move, not just the next link.

What that looks like in practice

Signals: hard constraints (budget/size/brands), soft signals (clicks, dismissals), context (calendar, season).

Memory: a profile store (DynamoDB) + a vector store for tastes, with time-decay and a bandit to balance explore/exploit.

Crawler: topic-scoped, incremental, pay-per-crawl when available; canonical metadata (schema.org Product/Offer; itinerary JSON).

Planner: scheduled sweeps (EventBridge) plus on-demand; more tools (CheckStock, PriceHistory, Currency/VAT, Hotels).

Guardrails: data-source consent, spend caps, explainability (“under €350, IPS, 4K”), one-tap “snooze topic”.

Cost hygiene: cache, dedupe, and only call expensive tools when probability of acceptance is high.
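To make the time-decay idea in the memory item concrete, here is a sketch using exponential decay with a half-life. The 14-day half-life, the +1/-1 signal encoding, and the function names are all assumptions for illustration; the bandit part is not covered.

```python
from typing import List, Tuple


def decayed_weight(weight: float, age_days: float, half_life_days: float = 14.0) -> float:
    """Halve a signal's weight every half_life_days."""
    return weight * 0.5 ** (age_days / half_life_days)


def preference_score(
    signals: List[Tuple[float, float]],
    now_day: float,
    half_life_days: float = 14.0,
) -> float:
    """Sum decayed signals, where each signal is (day_recorded, value).

    Assumed encoding: value is +1.0 for an accept and -1.0 for a dismissal.
    """
    return sum(
        decayed_weight(value, now_day - day, half_life_days)
        for day, value in signals
    )
```

With this shape, an accept from two weeks ago counts half as much as a dismissal from today, so a recent change of mind outweighs last month's rabbit hole.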

That’s where I’m steering this: from “ask and answer” to “watch, learn, propose.”

Lessons Learned

One extra tool makes a big difference—the moment the trace shows a tool switch, the agent feels real.

OpenAPI contracts buy reliability: the planner behaves when schemas are explicit.

$46 to land a deployable, demo-ready agent is a fine trade compared to wrestling mid-tier models all afternoon.

If this blueprint helps, subscribe. I’ll keep shipping minimal stacks you can fork on a weekend, tied to real architecture decisions—not just demos.
