RAG, Vector Database and RBAC

Recently, I talked to a coworker whose client is adopting a RAG (Retrieval-Augmented Generation) architecture. She was building an AI chat assistant on AWS and had a technical question: what is the best way to ensure that the strict permissions on the client’s documentation wiki are preserved when the data is moved into a vector database for use by the AI?

I find it an interesting topic to explore. I want to understand the best approaches available and their trade-offs.

I decided to look into this issue more and share my findings. This blog will explain how RBAC (Role-Based Access Control) can work well with RAG architecture in AWS.

Let’s start with the basics.

What is a Vector Database?

Vector databases are specialized databases designed to store and query vector embeddings generated by machine learning models.

Vector embeddings are a way of converting non-numeric data, like words or images, into a numeric form so that they can be processed by machine learning algorithms. The resulting numeric forms are vectors in a high-dimensional space, where each dimension captures some features or aspects of the input data. These embeddings help to preserve the contextual relationships between data points in their original forms.

How Vector Embeddings Work

Vector embeddings work by mapping the original data to points in a geometric space. Similar items are placed closer together, whereas dissimilar items are farther apart. This mapping is often learned from large amounts of data using machine learning techniques. For text data, these embeddings can capture semantic meanings, syntactic roles, and even relationships between words.

Example: Word Embeddings

Let’s consider an example using word embeddings, which are a common type of vector embedding in natural language processing (NLP). One popular model for creating word embeddings is Word2Vec.

Imagine we have four words: “king,” “queen,” “man,” and “woman.” The Word2Vec model can be trained on a large amount of text so that it learns to represent these words as vectors in a way that captures their relationships. For instance, it might capture gender relationships and royalty concepts. This can be visualized as vectors where “king” and “queen” are close to each other because both are royalty, and the vector from “king” to “queen” might be similar to the vector from “man” to “woman,” capturing the concept of gender.

Here is a simplified numerical example:

king → (0.5, 0.2, 0.9)

queen → (0.45, 0.25, 0.88)

man → (0.6, 0.1, 0.5)

These vectors might indicate that “king” and “queen” are more similar to each other (closer in vector space) than either is to “man,” reflecting a shared concept of royalty that “man” does not have.
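To make “closer in vector space” concrete, here is a minimal Python sketch that measures the three toy vectors above with two common metrics, Euclidean distance and cosine similarity. The numbers are the illustrative ones from this post, not real Word2Vec output, and the helper names are my own.

```python
import math

# Toy 3-dimensional vectors copied from the example above (not real Word2Vec output).
embeddings = {
    "king": (0.5, 0.2, 0.9),
    "queen": (0.45, 0.25, 0.88),
    "man": (0.6, 0.1, 0.5),
}


def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length (smaller = closer)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (closer to 1.0 = more similar)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


for first, second in [("king", "queen"), ("king", "man"), ("queen", "man")]:
    distance = euclidean_distance(embeddings[first], embeddings[second])
    similarity = cosine_similarity(embeddings[first], embeddings[second])
    print(f"{first:>5} vs {second:<5} distance={distance:.3f} cosine={similarity:.3f}")
```

With these numbers, the “king”/“queen” pair has the smallest distance and the highest cosine similarity of the three pairs.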

If you are a basketball fan, here is an example that helped me better understand how this works.

Imagine you’re trying to organize an extensive collection of basketball player trading cards based on different attributes: scoring ability, defensive skills, and teamwork. Each card contains a lot of qualitative data (like player performance descriptions) that isn’t immediately comparable in a numerical way.

Vector embeddings are like a tool that converts all those qualitative traits into scores on a scale, creating a “profile” for each player as a vector (or a list of numbers). For example, a player’s ability to score might be on a scale from 0 to 1, their defensive skills might also be scaled similarly, and so on.

Here’s how it relates to basketball:

Similarity in Space: Just as you might group players who have similar playing styles or strengths, vector embeddings place similar items (like words, or in our case, players) close together in a vector space. For instance, LeBron James and Kevin Durant might be closer in this space because their playing styles and roles are similar, while a specialist like Stephen Curry might be slightly further because of his exceptional shooting skills.

Understanding Relationships: Just like understanding that a point guard like Chris Paul plays differently from a center like Joel Embiid, word embeddings help capture relationships and differences between words. In our basketball analogy, vector embeddings might help a machine understand and quantify how different roles or skills relate to each other in the context of player evaluations.

Example with Player Profiles:

LeBron James might be embedded as (0.9, 0.8, 0.9) where these numbers represent his scoring ability, defensive skills, and teamwork, respectively.

Stephen Curry might be (0.95, 0.7, 0.85) reflecting his outstanding scoring, good defense, and great teamwork.

Joel Embiid might be (0.85, 0.9, 0.75), indicating strong scoring, excellent defense, and good teamwork.

These vectors help in quickly comparing players, understanding player types, or even predicting game outcomes based on player attributes. Just like in advanced stats or fantasy basketball, where players are often reduced to a set of numbers to predict performance, vector embeddings provide a way to mathematically and visually analyze and predict based on learned relationships and attributes.
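Finding “the most similar player” is the same operation a vector database performs at scale: a nearest-neighbour search. The sketch below does it by brute force over the three profiles above. The function name is hypothetical, and real systems use approximate indexes rather than comparing against every item.

```python
import math

# Player profiles from the example above: (scoring, defense, teamwork).
players = {
    "LeBron James": (0.9, 0.8, 0.9),
    "Stephen Curry": (0.95, 0.7, 0.85),
    "Joel Embiid": (0.85, 0.9, 0.75),
}


def nearest_player(name, profiles):
    """Return the other player whose profile is closest (Euclidean distance) to `name`."""
    target = profiles[name]
    others = (other for other in profiles if other != name)
    return min(others, key=lambda other: math.dist(target, profiles[other]))


for player in players:
    print(f"Closest profile to {player}: {nearest_player(player, players)}")
```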

Vector databases enable efficient similarity searches over these embeddings, making them ideal for applications like recommendation systems, image and text retrieval, and more. On AWS, services that can store and search vectors include Amazon OpenSearch Service, Amazon Aurora PostgreSQL-Compatible Edition, Amazon Neptune ML, Amazon MemoryDB for Redis, and Amazon DocumentDB.

RBAC (Role-Based Access Control)

RBAC is a method of regulating access to computer or network resources based on the roles of individual users within an organization. It involves assigning permissions to roles rather than to individual users, simplifying the management of user permissions. Key components of RBAC include roles, permissions, and users. Implementing RBAC enhances security, ensures compliance, and simplifies the management of access control.
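The three components (users, roles, permissions) can be sketched in a few lines of Python. The role names and permission strings below are hypothetical and chosen only for illustration. The point is that permissions hang off roles, and users only ever receive roles.

```python
from dataclasses import dataclass, field

# Hypothetical roles and permissions, chosen only for illustration.
ROLE_PERMISSIONS = {
    "data_engineer": {"documents:upload", "pipeline:trigger"},
    "researcher": {"vectors:query"},
    "admin": {"documents:upload", "pipeline:trigger", "vectors:query"},
}


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)


def is_allowed(user, permission):
    """A user holds a permission if any of their roles grants it."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


alice = User("alice", {"data_engineer"})
bob = User("bob", {"researcher"})

print(is_allowed(alice, "documents:upload"))
print(is_allowed(bob, "documents:upload"))
```

Changing what researchers may do means editing one entry in `ROLE_PERMISSIONS`, not touching every user, which is the management benefit described above.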

Using AWS Technologies to Integrate RBAC and FGAC in AI Applications

Controlling data access is important in AI and machine learning: it addresses security concerns and helps ensure that the system complies with organisational policies and privacy laws. With security features such as Role-Based Access Control (RBAC) and Fine-Grained Access Control (FGAC), AWS provides a solid foundation for implementing AI applications.

Architecture Overview

The core of our RAG application is a vector database that respects user roles and access privileges while processing, storing, and retrieving data. Data intake starts the process, which ends with safe data retrieval based on the permissions assigned to each user role.

1. Data Processing and Vector Generation:

Amazon S3 stores raw data, which is ingested by Amazon SageMaker for processing.

Amazon SageMaker processes the data into vectors. Each vector generated from the raw data is tagged with metadata that includes user role information, dictating who can access this data. This is where pairing the data with its security permissions begins.

2. Secure Data Storage and Access Control:

Amazon OpenSearch Service stores the generated vectors and serves queries over them. Its RBAC and FGAC features enforce access rules based on the metadata tags.

Role-Based and Fine-Grained Access Control Mechanisms

RBAC ensures that only users with appropriate roles can access specific operations within the AWS environment. For example, only data engineers might have the permission to upload new data and trigger processing workflows.

FGAC operates at a more granular level. In OpenSearch, FGAC uses the metadata associated with each vector to determine access rights. For instance, if a vector is tagged with the role ‘Researcher’, only users assigned the ‘Researcher’ role can query or view this data.

Vector Data Structure

Suppose we have a machine learning application that processes research articles to generate semantic vectors representing their content for a recommendation system. Here’s an example of how a vector and its associated metadata might be stored in Amazon OpenSearch Service:

    {
      "document_id": "doc123",
      "vector": [0.12, 0.23, 0.31, ..., 0.29], // Example of a 128-dimensional vector
      "metadata": {
        "created_by": "researcher01",
        "role": ["Researcher", "Data Scientist"],
        "department": "Machine Learning",
        "access_level": "confidential"
      }
    }

document_id: A unique identifier for the document.

vector: The numerical vector generated by Amazon SageMaker from the document’s content.

metadata: Contains additional data about the document, crucial for access control:

created_by: The user or process that generated this vector.

role: An array that lists all the roles that are authorized to access this vector.

department: The department within the organization that owns or is associated with this data.

access_level: Defines the sensitivity of the data, influencing who can access it.
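For a structure like this to be searchable and filterable, the index needs a mapping. The sketch below shows one plausible mapping for the OpenSearch k-NN plugin (`knn_vector` for the embedding, `keyword` for the fields used in exact-match filters) plus a small helper that assembles a document in the shape above. The helper name and the dimension check are my own assumptions, and the bodies are only built here, not sent to a cluster.

```python
import json

VECTOR_DIMENSION = 128  # matches the 128-dimensional example above

# Index definition: knn_vector for the embedding, keyword fields for exact-match filters.
index_body = {
    "settings": {"index": {"knn": True}},
    "mappings": {
        "properties": {
            "document_id": {"type": "keyword"},
            "vector": {"type": "knn_vector", "dimension": VECTOR_DIMENSION},
            "metadata": {
                "properties": {
                    "created_by": {"type": "keyword"},
                    "role": {"type": "keyword"},
                    "department": {"type": "keyword"},
                    "access_level": {"type": "keyword"},
                }
            },
        }
    },
}


def build_document(document_id, vector, created_by, roles, department, access_level):
    """Assemble a document in the shape shown above (hypothetical helper)."""
    if len(vector) != VECTOR_DIMENSION:
        raise ValueError(f"expected {VECTOR_DIMENSION} dimensions, got {len(vector)}")
    return {
        "document_id": document_id,
        "vector": list(vector),
        "metadata": {
            "created_by": created_by,
            "role": list(roles),
            "department": department,
            "access_level": access_level,
        },
    }


doc = build_document(
    "doc123",
    [0.0] * VECTOR_DIMENSION,  # placeholder values, not a real embedding
    "researcher01",
    ["Researcher", "Data Scientist"],
    "Machine Learning",
    "confidential",
)
print(json.dumps(doc["metadata"], indent=2))
```

Mapping `metadata.role` as `keyword` matters: role names are then matched exactly rather than being analysed as free text.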

Applying RBAC and FGAC to the Vector Data

Here’s how RBAC and FGAC would work with this vector data:

RBAC (Role-Based Access Control):

In RBAC, roles are predefined with certain permissions. For example, users assigned the “Data Scientist” role may have permissions to access vectors across multiple projects, whereas “Researchers” may only access vectors they or their team created.

FGAC (Fine-Grained Access Control):

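FGAC is applied at the document level in OpenSearch. With document-level security, each OpenSearch role can carry a filter query. When a user searches, only documents whose metadata (for example, the role and access_level fields) satisfies the filter attached to that user’s role are visible to them.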

For example, if a user with the role “Intern” queries for vectors, the system checks the role field of each vector’s metadata. Since “Intern” is not listed, vectors with “Researcher” and “Data Scientist” roles in their metadata would not be returned to this user.
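In the OpenSearch security plugin, a document-level restriction is expressed as a `dls` entry in a role’s index permissions: a query, serialized as a string, that every visible document must match. The sketch below builds such a role definition and emulates the check locally so the “Intern” case can be run without a cluster. The index pattern and the helper names are hypothetical, and the local check only handles the single `terms` filter built here.

```python
import json


def build_dls_role(index_pattern, allowed_role_values):
    """Build a role body whose document-level security (DLS) query only
    matches documents that list one of `allowed_role_values` in metadata.role."""
    dls_query = {"terms": {"metadata.role": sorted(allowed_role_values)}}
    return {
        "index_permissions": [
            {
                "index_patterns": [index_pattern],
                "dls": json.dumps(dls_query),  # DLS is stored as a JSON string
                "allowed_actions": ["read"],
            }
        ]
    }


def visible_to(role_definition, document):
    """Local emulation of the terms filter above, for illustration only."""
    for permission in role_definition["index_permissions"]:
        allowed = json.loads(permission["dls"])["terms"]["metadata.role"]
        if set(allowed) & set(document["metadata"]["role"]):
            return True
    return False


document = {"document_id": "doc123", "metadata": {"role": ["Researcher", "Data Scientist"]}}
researcher_role = build_dls_role("research-vectors*", ["Researcher"])
intern_role = build_dls_role("research-vectors*", ["Intern"])

print(visible_to(researcher_role, document))
print(visible_to(intern_role, document))
```

Because the filter is attached to the role inside OpenSearch, it applies to every search that role performs, even if the calling application forgets to add its own filter.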

Querying with Access Controls

When a user submits a query, the process might look like this:

User Query: A “Data Scientist” queries for vectors related to a specific machine learning topic.

Authentication and Authorization: The user is authenticated with their security credentials (e.g., via Amazon Cognito), and their role is resolved from the resulting identity.

Query Processing: OpenSearch processes the query. It first filters vectors by checking if the user’s role matches any of the roles listed in the metadata.role field of each document.

Data Retrieval: Only vectors whose metadata matches the user’s role and meets other criteria (like access_level) are retrieved and returned to the user.
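The steps above can be sketched as a query builder. It assumes roles are modelled as Cognito groups (which appear in the `cognito:groups` claim of a user pool token) and that the token has already been verified elsewhere. It then builds a k-NN search body whose `filter` restricts candidates to documents listing one of the user’s roles. Filtering inside the `knn` clause depends on the OpenSearch version and k-NN engine in use, so treat this as one possible shape rather than the definitive query. The function names are hypothetical.

```python
def roles_from_claims(claims):
    """Read roles from already-verified token claims.
    Assumes roles are modelled as Cognito groups."""
    return list(claims.get("cognito:groups", []))


def build_knn_query(query_vector, roles, k=5, access_levels=None):
    """Build a k-NN search body restricted to documents the roles may see."""
    if not roles:
        # Fail closed: a user without roles must not fall back to an unfiltered search.
        raise ValueError("user has no roles; refusing to build an unfiltered query")
    conditions = [{"terms": {"metadata.role": list(roles)}}]
    if access_levels:
        conditions.append({"terms": {"metadata.access_level": list(access_levels)}})
    return {
        "size": k,
        "query": {
            "knn": {
                "vector": {
                    "vector": list(query_vector),
                    "k": k,
                    "filter": {"bool": {"must": conditions}},
                }
            }
        },
    }


claims = {"sub": "user-123", "cognito:groups": ["Researcher"]}  # example claims
body = build_knn_query([0.12, 0.23, 0.31], roles_from_claims(claims), k=3)
print(body["query"]["knn"]["vector"]["filter"])
```

An application-side filter like this works best alongside role-level restrictions inside OpenSearch, so that a bug in the API layer cannot silently widen access.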

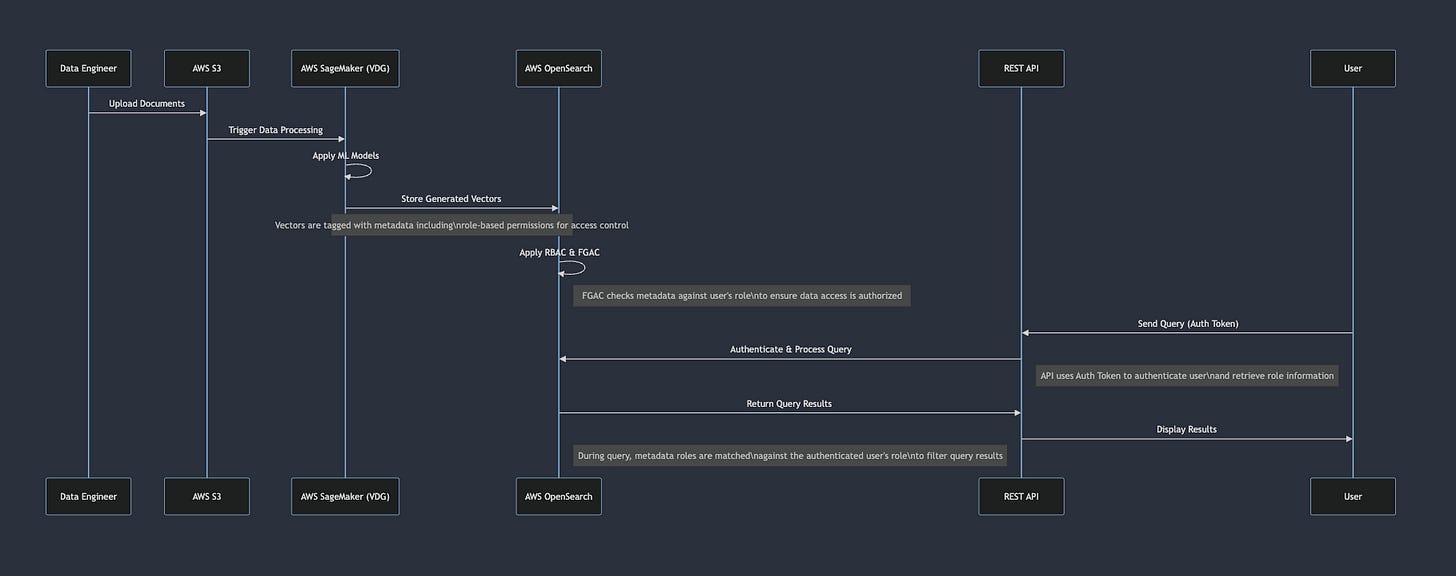

I represented the flow of this user journey with a sequence diagram. Its steps are as follows:

Data Engineer Uploads Documents:

Documents are uploaded to Amazon S3 by the Data Engineer, initiating the data processing sequence.

Trigger Data Processing in SageMaker:

The new documents in S3 trigger Amazon SageMaker (for example, through an S3 event notification), which begins the vector generation process.

Apply ML Models in SageMaker:

Amazon SageMaker applies machine learning models to convert the raw data into vectors, encoding the content of each document in a numeric form that can be searched by similarity.

Store Generated Vectors with Metadata in OpenSearch:

Once vectors are generated, they are stored in Amazon OpenSearch Service along with metadata. This metadata includes role-based permissions, specifying which roles can access or interact with the vectors.

Apply RBAC & FGAC in OpenSearch:

As vectors are stored, Amazon OpenSearch Service applies Role-Based Access Control (RBAC) and Fine-Grained Access Control (FGAC). These controls use the stored metadata to manage access based on the roles specified within it.

User Sends Query via REST API:

The user sends a query through the REST API, including an authentication token that helps verify their identity and associated role.

Authenticate & Process Query in OpenSearch:

The REST API validates the user’s token, retrieves role information, and passes the query to Amazon OpenSearch Service, where the user’s role is checked against the roles allowed in the vector metadata.

Return Query Results:

OpenSearch filters the query results based on the FGAC settings, ensuring that the user only receives data they are authorized to access based on their role.

Display Results to User:

Finally, the filtered results are sent back to the user through the REST API, completing the user’s request.
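To see the whole journey in one place, here is a toy, in-memory walk-through of the same flow: ingest a document, turn it into a vector, store it with role metadata, then answer a query only from documents the caller’s roles may see. Everything here is a stand-in: `toy_embed` replaces a real embedding model, and `InMemoryVectorStore` replaces OpenSearch. The role filter runs before similarity ranking, so unauthorized documents never enter the result set.

```python
import math


def toy_embed(text, dims=8):
    """Stand-in for a real embedding model: buckets words into a small unit vector."""
    vector = [0.0] * dims
    for word in text.lower().split():
        vector[sum(ord(ch) for ch in word) % dims] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]


class InMemoryVectorStore:
    """Hypothetical stand-in for the vector database."""

    def __init__(self):
        self.documents = []

    def index(self, document_id, text, roles):
        """Ingest step: embed the text and store it with its allowed roles."""
        self.documents.append(
            {
                "document_id": document_id,
                "vector": toy_embed(text),
                "metadata": {"role": list(roles)},
            }
        )

    def search(self, query, user_roles, k=3):
        """Query step: filter by role first, then rank by similarity."""
        allowed = [
            doc for doc in self.documents
            if set(doc["metadata"]["role"]) & set(user_roles)
        ]
        query_vector = toy_embed(query)

        def score(doc):
            return sum(a * b for a, b in zip(query_vector, doc["vector"]))

        ranked = sorted(allowed, key=score, reverse=True)
        return [doc["document_id"] for doc in ranked[:k]]


store = InMemoryVectorStore()
store.index("doc1", "vector search tuning notes", ["Researcher", "Data Scientist"])
store.index("doc2", "salary review guidelines", ["HR"])

print(store.search("vector search", ["Researcher"]))
print(store.search("vector search", ["Intern"]))
```

A “Researcher” only ever gets `doc1` back, and an “Intern” gets an empty list, regardless of how similar the query is to the stored text.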

Note on Metadata and Role Matching:

Embedding Roles in Metadata: When vectors are stored in OpenSearch, they are tagged with metadata that includes specific role-based permissions. This metadata is crucial for controlling access to the data.

Matching Roles During Query Processing: During the query process, OpenSearch checks the metadata against the authenticated user’s role. This ensures that each user only accesses vectors they are authorized to see, aligning with security and compliance requirements.

In order to protect user privacy, ensure compliance, and preserve data security, AI applications must be equipped with strong Role-Based and Fine-Grained Access Control mechanisms. By combining AWS services such as S3 for data management, SageMaker for vector generation, and OpenSearch for secure storage and querying, enterprises can build RAG systems that strike a balance between strict access rules and sophisticated data retrieval capabilities. This approach lets people engage effectively with AI-driven insights while protecting sensitive data through stringent access controls. By integrating security throughout the whole data lifecycle, from ingestion to retrieval, organisations can get the most out of AI technologies while upholding high standards for data governance and security. This setup shows how AI can be both powerful and secure.